What do you get when you mix artificial intelligence, cloud, and serverless?

You guessed it! You get a new cognitive open source initiative code-named “Cyborg”. Cyborg is an implementation of Software Defined Senses (SDSens), a concept first introduced as part of this project.

This open source project, and the SDSens idea overall, is still young. We plan to keep expanding it, on our own or, if there is interest, with the help of the community.

As we all know, humans have five core senses: sight, hearing, smell, taste, and touch.

Today, personal assistants (Alexa among others) can hear, and they are in the early stages of developing the intelligence to think like humans (still far from the singularity). The other important senses, however, are still missing, and SDSens aims to develop them.

The goal of the Cyborg project, and of SDSens more broadly, is to introduce the full set of senses, starting with sight.

What is the vision?

Once the project matures, we envision its first physical incarnation as hardware that combines a personal assistant (or a robot) with “embedded” camera(s) that become the eye(s) of the Cyborg. Adding more senses later, and expanding the SDSens vision, would certainly be possible as well.

The opportunities are obvious and endless: healthcare, security (personal as well as national), entertainment, child care, and much more.

How did it start?

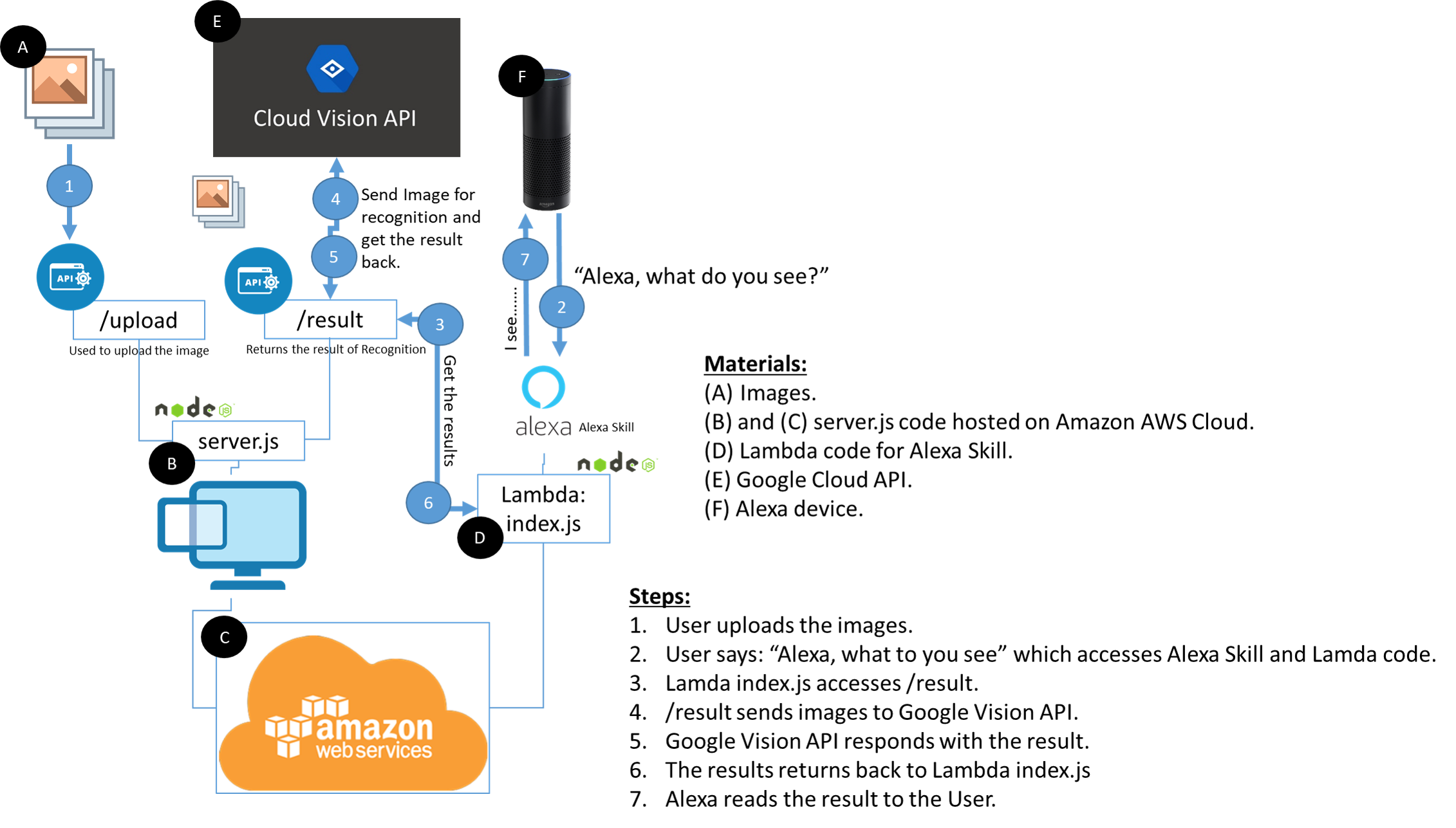

This project was started by Dan (http://dan.techdozor.org) as a Science Fair project for Cross Roads Elementary School in Irmo, SC (https://www.lexrich5.org/cris). Its main focus is a new Alexa Vision Skill that can recognize images. The work resulted in two independent but connected projects:

- Project Cyborg, a framework for interacting with a personal assistant such as Alexa. It is implemented in Node.js and hosted on the AWS Lambda service: https://github.com/techdozor/cyborg

- The Vision API project, which provides the core image recognition (video recognition is planned for the future): https://github.com/techdozor/visionapi

Both projects are hosted on GitHub and are open source under the MIT license.
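Because Cyborg runs as a Node.js function on AWS Lambda, a minimal sketch of what such a skill handler can look like may help. It is not taken from the Cyborg repository: the intent name `DescribeImageIntent` and the `recognizeImage` callback are hypothetical placeholders. The sketch reads the standard Alexa Skills Kit request JSON (`request.type`, `request.intent.name`) and returns a plain-text speech response without relying on the ASK SDK.

```javascript
// Minimal sketch of an Alexa custom-skill handler for AWS Lambda (Node.js).
// Hypothetical: the "DescribeImageIntent" intent name and the recognizeImage
// callback are placeholders, not part of the Cyborg repository.

// Wrap text in the Alexa Skills Kit response format.
function speak(text, shouldEndSession = true) {
  return {
    version: "1.0",
    response: {
      outputSpeech: { type: "PlainText", text },
      shouldEndSession,
    },
  };
}

// Build a handler around an injectable recognizer so the vision backend
// can be replaced by a stub during local testing.
function createHandler(recognizeImage) {
  return async function handler(event) {
    const request = (event && event.request) || {};

    if (request.type === "LaunchRequest") {
      // Keep the session open so the user can ask a follow-up question.
      return speak("Cyborg is ready. Ask me what I see.", false);
    }

    if (request.type === "IntentRequest" &&
        request.intent && request.intent.name === "DescribeImageIntent") {
      const description = await recognizeImage();
      return speak(`I see ${description}.`);
    }

    // Any other request or intent falls through to a generic reply.
    return speak("Sorry, I did not understand that.");
  };
}

// Hypothetical stub; a real deployment would call the Vision API here.
const handler = createHandler(async () => "nothing yet");

// Export the entry point CommonJS-style so Lambda can load "handler".
if (typeof module !== "undefined" && module.exports) {
  module.exports.handler = handler;
}
```

Injecting `recognizeImage` keeps the request-routing logic testable locally, without network access or a deployed Vision API.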

After the success of the Science Fair project, a group of like-minded people and I (including Dan) decided to start expanding the project and SDSens as a whole.

We look forward to expanding this project with the help of the community, and to introducing SDSens, a new movement to build software senses for robots.

How does it work?

The diagram below provides a high-level overview of the internals of the process.
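As a rough illustration of the round trip (not a reproduction of the diagram or of the actual Vision API contract), the Cyborg side might send an image reference to the Vision API over HTTP and turn the returned labels into a sentence for Alexa to speak. The endpoint URL, the `{ imageUrl }` request body, and the `{ labels: [{ name, score }] }` reply shape are assumptions made for this sketch; the score threshold and label limit are arbitrary defaults.

```javascript
// Sketch of the Cyborg-side flow: ask a Vision API service to recognize an
// image, then phrase the result for speech.
// Assumptions (hypothetical, not from the visionapi repository): the endpoint,
// the { imageUrl } request body, and a JSON reply shaped like
// { labels: [{ name: "a cat", score: 0.93 }, ...] }.

async function recognize(visionApiUrl, imageUrl) {
  // Global fetch is available in Node.js 18 and later.
  const res = await fetch(visionApiUrl, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ imageUrl }),
  });
  if (!res.ok) throw new Error(`Vision API returned HTTP ${res.status}`);
  const data = await res.json();
  return data.labels || [];
}

// Keep confident labels only, best first, and join them into one sentence.
function describeLabels(labels, minScore = 0.5, maxLabels = 3) {
  const names = labels
    .filter((l) => l.score >= minScore) // filter() copies, so input is not mutated
    .sort((a, b) => b.score - a.score)
    .slice(0, maxLabels)
    .map((l) => l.name);

  if (names.length === 0) return "I am not sure what I am looking at.";
  if (names.length === 1) return `I see ${names[0]}.`;
  return `I see ${names.slice(0, -1).join(", ")} and ${names[names.length - 1]}.`;
}
```

For example, `describeLabels([{ name: "a cat", score: 0.9 }, { name: "a sofa", score: 0.6 }, { name: "a dog", score: 0.2 }])` returns `"I see a cat and a sofa."`, because the low-confidence dog label falls below the default 0.5 threshold.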

Personal Observation Note

As a side note, this project gave me great exposure to, and familiarity with, the cloud services offered by AWS and Google, including but not limited to TensorFlow, AWS cloud primitives (IaaS), containers, serverless functions such as AWS Lambda, and AI services.

I have to admit that I am very impressed with the maturity of these offerings. As a developer, manager, and executive, I see no reason to develop any software on premises anymore.

There is no doubt in my mind that the cloud will win the majority of workloads, if not take over everything, within the next 10 years.

Take Cyborg for a spin yourself!